My research interests are:

- Optical Markerless Motion Capture and Pose Estimation
- 2D-3D Pose Estimation
- Real-Time Tracking and Multidisplay Rendering
- Augmentation (AR), Panoramas and Real-Time Graphics

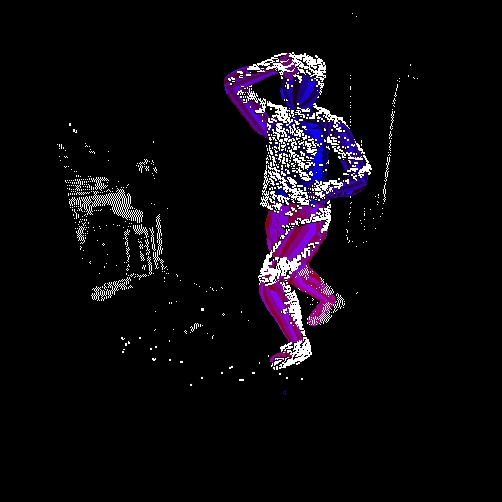

Articulated ICP from Stereo Images (see PhD thesis)

To apply the developed pose estimation algorithm, correspondences between observed 3D points and 3D model points are needed. Because the observed points are computed from depth maps, these correspondences are not known in advance.

As in other tracking systems, an Iterative Closest Point (ICP) approach is taken: for each observed point, the nearest point on the model is assumed to be its corresponding point. From these correspondences, the body pose of the model is calculated.
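The closest-point rule can be illustrated with a minimal brute-force sketch. It assumes that the observed points (from the depth map) and the candidate model points (in the original, only the visible ones) are already available as plain 3D tuples; the function names are hypothetical and this is not the implementation used in the thesis. A real system would typically replace the linear scan with a spatial index such as a k-d tree.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def closest_point_index(p: Point3, model: Sequence[Point3]) -> int:
    """Index of the model point nearest to p (linear scan, Python 3.8+)."""
    return min(range(len(model)), key=lambda i: math.dist(p, model[i]))


def build_correspondences(observed: Sequence[Point3],
                          model: Sequence[Point3]) -> List[Tuple[int, int]]:
    """Pair each observed point j with its nearest model point k as (j, k)."""
    return [(j, closest_point_index(p, model)) for j, p in enumerate(observed)]


if __name__ == "__main__":
    model = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    observed = [(0.9, 0.1, 0.0), (0.1, 0.8, 0.05)]
    print(build_correspondences(observed, model))  # [(0, 1), (1, 2)]
```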

The following steps are repeated several times for each frame of a sequence. The larger the relative motion, the more of the correspondences will be incorrect. The principal idea of ICP is that each estimated relative motion reduces the difference between model and observation, so that when the correspondences are rebuilt for this smaller difference in the next iteration, more of them are correct.
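The effect described above, that rebuilding the correspondences after each motion estimate makes more of them correct, can be reproduced with a deliberately simplified toy: a 2D, translation-only ICP in which the least-squares motion for fixed correspondences is simply the mean offset. The point set, the shift and the function names are hypothetical; the thesis estimates joint angles of an articulated 3D model instead.

```python
import math
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]


def nearest(p: Point2, pts: Sequence[Point2]) -> int:
    """Index of the point in pts closest to p."""
    return min(range(len(pts)), key=lambda i: math.dist(p, pts[i]))


def icp_translation(model: Sequence[Point2], observed: Sequence[Point2],
                    iterations: int = 10) -> Tuple[Point2, List[List[int]]]:
    """Toy translation-only ICP. Returns the estimated shift of the model and,
    for every iteration, the model index matched to each observed point."""
    tx, ty = 0.0, 0.0
    history: List[List[int]] = []
    n = len(observed)
    for _ in range(iterations):
        # Rebuild correspondences against the model in its current pose.
        moved = [(x + tx, y + ty) for x, y in model]
        match = [nearest(o, moved) for o in observed]
        history.append(match)
        # For fixed pairs, the least-squares translation is the mean offset.
        new_tx = sum(o[0] - model[k][0] for o, k in zip(observed, match)) / n
        new_ty = sum(o[1] - model[k][1] for o, k in zip(observed, match)) / n
        if math.isclose(new_tx, tx) and math.isclose(new_ty, ty):
            break  # rebuilt correspondences no longer change the estimate
        tx, ty = new_tx, new_ty
    return (tx, ty), history


if __name__ == "__main__":
    model = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 1.0), (0.0, 2.0)]
    observed = [(x + 0.6, y + 0.1) for x, y in model]  # true shift (0.6, 0.1)
    shift, history = icp_translation(model, observed)
    for i, match in enumerate(history, start=1):
        correct = sum(k == j for j, k in enumerate(match))
        print(f"iteration {i}: {correct}/{len(match)} correct")
    print(tuple(round(v, 6) for v in shift))
```

With this shift, the first iteration matches only 3 of 5 points correctly, yet the resulting estimate moves the model close enough that the second iteration matches all 5; the third iteration confirms the shift (0.6, 0.1) and stops.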

The steps in one ICP iteration are:

- a) Render the model in its current pose with a unique color for each triangle (OpenSG).
- b) Find the visible model points by analyzing the colors of the rendered image pixels.
- c) Build correspondences from the depth map by finding the nearest model point for each observed point.
- d) Estimate the relative motion from the 3D-point-to-3D-point correspondences by nonlinear least squares.
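Steps a and b rely on color-coding triangle indices. The following is a minimal sketch of one possible encoding, assuming 8 bits per RGB channel, a background cleared to black, and rendering without lighting, blending or anti-aliasing (otherwise the colors read back would not match exactly). It does not use or reproduce the OpenSG API, and the helper names are hypothetical.

```python
from typing import Iterable, Set, Tuple

RGB = Tuple[int, int, int]
BACKGROUND: RGB = (0, 0, 0)  # assumption: the frame buffer is cleared to black


def id_to_color(triangle_id: int) -> RGB:
    """Encode a triangle index as a unique 24-bit RGB color.
    The code 0 is reserved for the background, hence the +1 offset."""
    code = triangle_id + 1
    if not 0 < code < 1 << 24:
        raise ValueError("triangle index does not fit into 24 bits")
    return ((code >> 16) & 0xFF, (code >> 8) & 0xFF, code & 0xFF)


def color_to_id(color: RGB) -> int:
    """Inverse of id_to_color for a pixel read back from the rendered image."""
    r, g, b = color
    return ((r << 16) | (g << 8) | b) - 1


def visible_triangles(pixels: Iterable[RGB]) -> Set[int]:
    """Indices of all triangles that cover at least one rendered pixel."""
    return {color_to_id(c) for c in pixels if c != BACKGROUND}


if __name__ == "__main__":
    # A tiny hypothetical "rendered image", flattened to a list of pixels.
    image = [BACKGROUND, id_to_color(0), id_to_color(41),
             id_to_color(41), id_to_color(300)]
    print(id_to_color(300))                  # (0, 1, 45)
    print(sorted(visible_triangles(image)))  # [0, 41, 300]
```

Reserving code 0 for the background lets a single pass over the read-back pixels separate "no triangle" from triangle 0 without a separate mask.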

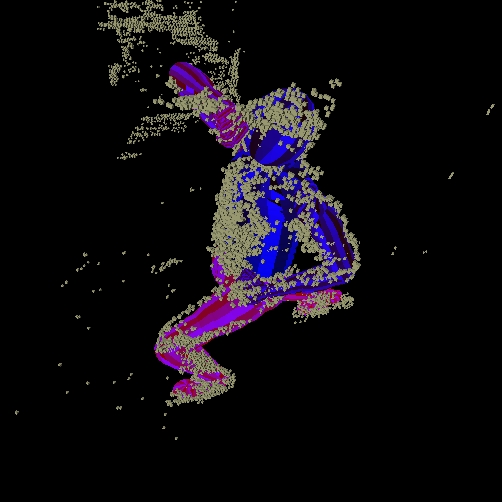

Two views of the stereo input data (white boxes) for one image frame. The articulated ICP estimates joint angles such that the surface of the human model fits the input data as closely as possible. Correspondences are found by using the closest model point for each input point.

Video (17 MB, WMV): the resulting sequence (24 DOF).

Human Models

There are many different human models with different movement capabilities, each usually designed for a specific case. Although no commonly used standard for human animation models exists, the MPEG group defines a rather general model in the MPEG-4 specification, which is essentially the H-Anim body specification. The H-Anim model is the basis for my work and makes it possible to use MPEG-4 BAP files (Body Animation Parameters) for animating characters.

The images show two H-Anim models in VRML format, which can be loaded and viewed with OpenSG.

For real-time tracking, not all of the 180 degrees of freedom of the MPEG-4 body model are necessary. In addition, the geometry of the model should be as simple as possible to allow fast determination of visible points, fast building of correspondences, etc.

Therefore, we created our own simple body model with 39 degrees of freedom, shown on the right. Its number of triangles is also greatly reduced compared to the model on the left.

The possible movements are shown as arrows; each arrow represents a rotational joint axis. The key assumption of our approach is that the motion can be described sufficiently well by rotation angles about known axes.
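Because every joint is a rotation about a known axis, a model point moves according to Rodrigues' rotation formula, and its derivative with respect to the angle is simply the axis crossed with the rotated offset. The sketch below uses this to recover a single hypothetical joint angle from 3D-3D correspondences with Gauss-Newton iterations, a one-parameter analogue of step d above; the actual model chains 39 such axes, and all names and values here are illustrative only.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate_about_axis(p: Vec3, origin: Vec3, axis: Vec3, angle: float) -> Vec3:
    """Rotate p by `angle` (radians) about the line through `origin` with
    unit direction `axis`, using Rodrigues' rotation formula."""
    v = _sub(p, origin)
    c, s = math.cos(angle), math.sin(angle)
    kxv, kdv = _cross(axis, v), _dot(axis, v)
    return tuple(origin[i] + v[i] * c + kxv[i] * s + axis[i] * kdv * (1.0 - c)
                 for i in range(3))


def estimate_angle(model: Sequence[Vec3], observed: Sequence[Vec3],
                   origin: Vec3, axis: Vec3, angle: float = 0.0,
                   iterations: int = 20) -> float:
    """Gauss-Newton estimate of one joint angle from 3D-3D correspondences."""
    for _ in range(iterations):
        num = den = 0.0
        for m, o in zip(model, observed):
            q = rotate_about_axis(m, origin, axis, angle)
            r = _sub(q, o)                     # residual vector
            j = _cross(axis, _sub(q, origin))  # derivative of q w.r.t. angle
            num += _dot(j, r)
            den += _dot(j, j)
        if den == 0.0:
            break  # all points lie on the axis: the angle is unobservable
        step = -num / den
        angle += step
        if abs(step) < 1e-12:
            break
    return angle


if __name__ == "__main__":
    origin, axis = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)  # hypothetical joint axis
    model = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.5)]
    observed = [rotate_about_axis(m, origin, axis, 0.5) for m in model]
    print(round(estimate_angle(model, observed, origin, axis), 6))  # 0.5
```

Only the scalar angle is unknown here, so the normal equations reduce to a single division; with many joints the same derivative terms become the columns of the Jacobian.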

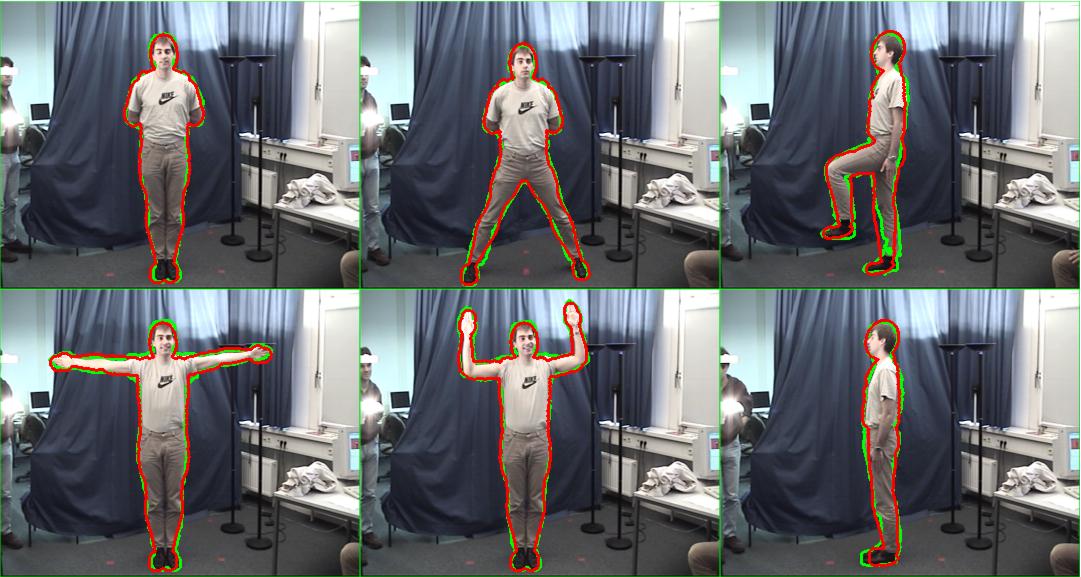

Model Fitting

To track the motion of a specific person, our approach requires a model of that person that fits as well as possible. We developed a fitting algorithm that estimates scale values for each body part of a template model. The fitting has to be performed for each person. We use only a single camera (see publications: Human Model Fitting from Monocular Posture Images).

To overcome the lack of depth information (single camera), the person has to strike six different postures, as shown on the right. The approach is based on silhouette information and minimizes the differences between model and template silhouettes by nonlinear least squares, solved with the Levenberg-Marquardt method (up to 100 parameters). The optimization estimates the parameters for all six images simultaneously.
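The idea of fitting one set of scale values to several postures at once with Levenberg-Marquardt can be sketched on a much smaller stand-in problem: a hypothetical planar two-segment arm whose template segment lengths are scaled by two unknown factors, observed in six hypothetical postures. Joint positions replace the silhouette differences used in the original, so the residuals here are even linear in the scales; the loop itself (forward-difference Jacobian, damped normal equations with Marquardt's diagonal scaling, damping decreased on success and increased on failure) is a textbook variant, not the author's implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple

Residuals = Callable[[Sequence[float]], List[float]]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small linear system a x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def levenberg_marquardt(f: Residuals, p0: Sequence[float], iterations: int = 50,
                        lam: float = 1e-3, h: float = 1e-6) -> List[float]:
    """Minimise sum(f(p)**2) with a basic Levenberg-Marquardt loop."""
    p, n = list(p0), len(p0)
    r0 = f(p)
    cost = sum(r * r for r in r0)
    for _ in range(iterations):
        # Forward-difference Jacobian, stored column by column.
        cols = []
        for i in range(n):
            q = p[:]
            q[i] += h
            cols.append([(a - b) / h for a, b in zip(f(q), r0)])
        jtj = [[sum(x * y for x, y in zip(cols[i], cols[j])) for j in range(n)]
               for i in range(n)]
        jtr = [sum(x * y for x, y in zip(cols[i], r0)) for i in range(n)]
        # Damped normal equations with Marquardt's diagonal scaling.
        damped = [[jtj[i][j] * (1.0 + lam) if i == j else jtj[i][j]
                   for j in range(n)] for i in range(n)]
        trial = [pi + si for pi, si in zip(p, solve(damped, [-g for g in jtr]))]
        r_trial = f(trial)
        trial_cost = sum(r * r for r in r_trial)
        if trial_cost < cost:  # accept: behave more like Gauss-Newton
            p, r0, cost, lam = trial, r_trial, trial_cost, lam / 10.0
        else:                  # reject: shorter, more gradient-like steps
            lam *= 10.0
    return p


# Hypothetical stand-in data: template segment lengths and six known
# postures given as (shoulder angle, elbow angle) in radians.
TEMPLATE: Tuple[float, float] = (0.30, 0.25)
POSTURES = [(0.0, 0.3), (0.5, 0.5), (1.0, -0.4), (1.5, 0.8), (-0.7, 1.2), (2.2, 0.1)]


def arm_points(scales: Sequence[float], shoulder: float, elbow: float) -> List[float]:
    """Elbow and wrist positions [x, y, x, y] of the scaled template arm."""
    l1, l2 = scales[0] * TEMPLATE[0], scales[1] * TEMPLATE[1]
    ex, ey = l1 * math.cos(shoulder), l1 * math.sin(shoulder)
    return [ex, ey,
            ex + l2 * math.cos(shoulder + elbow),
            ey + l2 * math.sin(shoulder + elbow)]


OBSERVED = [arm_points((1.2, 0.8), s, e) for s, e in POSTURES]  # true scales


def residuals(scales: Sequence[float]) -> List[float]:
    """Residuals of all postures stacked, so they are fitted simultaneously."""
    out: List[float] = []
    for (s, e), obs in zip(POSTURES, OBSERVED):
        out.extend(p - o for p, o in zip(arm_points(scales, s, e), obs))
    return out


if __name__ == "__main__":
    print([round(v, 6) for v in levenberg_marquardt(residuals, [1.0, 1.0])])  # [1.2, 0.8]
```

Stacking the residuals of all postures into one vector is what makes the postures constrain a single, shared set of scale parameters.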
